This was part of my coursework for the Information Retrieval and Extraction course during my Masters, and it was selected as one of the best projects in that course. So I want to share some details about the implementation and the research perspective (maybe some people can expand on the idea). I studied the existing work and added some new features to the summarization of blogs on the basis of comments.

Word of Caution: The method proposed here is useful only for blogs that have properly informative comments, for example, TechCrunch.com blogs.

Now let's start by understanding the title itself.

What is Summarization of a Text?

Let's say we have a document D (a text) consisting of some sentences. The task of summarization is to extract a subset of sentences from D, denoted by Sc (Sc ⊂ D), that best represents the topic(s) presented in D.

In comment-oriented text/blog summarization, we additionally make use of the comments, which ultimately improves the quality of the output.

I hope you now have a rough idea of what we have to implement.

Scenario Formation

Let's consider a scenario where we have a text of 100 sentences on Java that we have to summarize. One may ask how many sentences or words are expected in the output summary.

Usually, about 20% of the input sentences (20 sentences here) are expected in the summary. This percentage is not a standard; it is a subjective choice. It is better to have fewer sentences in the output so that you can evaluate your algorithm more precisely. Note that we are not going to generate new sentences or information; we only extract the sentences from the input text that convey its central idea. I genuinely suggest not fixing the summary length by number of words, since you may end up cutting a sentence and leaving its meaning incomplete. A word limit may be useful if you generate new text while keeping the comments in mind, but that approach involves checking grammar, fluency of the generated language, concept representation, etc. So here we generate the summary on the basis of the number of sentences.

What’s the use of Comments ?

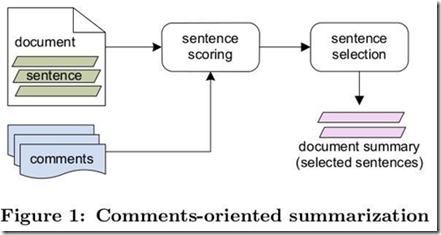

A considerable amount of research has been done on text summarization without considering comments. The results were pretty good but not up to the mark, as these methods can fail to extract the sentences that represent the central theme of the text. The main reason for including comments in summarization is that, most of the time, they discuss the central idea of the text. But the blog or text must have a considerable number of comments for better results. The figure above (Ref: Meishan Hu, Aixin Sun, and Ee-Peng Lim) gives an overview of this.

I guess you now have an overview of what is expected and how comments can be used to achieve it.

The Algorithm is as follows:

Phase I: Finding Dominant Comments that may represent Central Theme using

- Topic Graph

- Mention Graph

- Named Entity Recognition

Phase II: Extracting Sentences on the basis of Dominant Comments from Phase I

Phase III: Finding the Quality of the Summary

Now, we will see each phase in detail:

Phase I:

We know that not all comments are useful enough to be used for extracting the central theme of a blog. Let each comment have a unique ID from 1 to N.

Now, let's see some graphs that are used further in our algorithm.

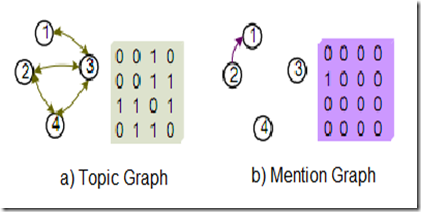

i) Topic Graph

It shows how the comments are topically/contextually related to each other: there is an edge between two nodes iff the corresponding comments are topically related. We can represent this graph using various data structures, for example, a 2D array (adjacency matrix), an array of linked lists (adjacency list), etc.

But how can we find out whether two comments are topically related or not?

For that purpose, we can make use of cosine similarity. We will not go deep into cosine similarity here, but you can refer to the Wikipedia page, which explains the concept very well. Each comment is treated as a vector of word counts, and the measure is the cosine of the angle between the two vectors, so it is driven by the words the comments have in common. The smaller the angle (i.e., the closer the cosine is to 1), the more similar the comments.
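As a rough illustration, here is a minimal Python sketch of cosine similarity over simple word counts, and of a Topic Graph stored as a 2D array. The function names, the tokenizer, and the 0.3 threshold are illustrative assumptions, not values taken from the project; the threshold in particular would need tuning on real comments.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(text_a, text_b):
    """Cosine of the angle between the word-count vectors of two texts."""
    vec_a, vec_b = Counter(tokenize(text_a)), Counter(tokenize(text_b))
    # Only words present in both texts contribute to the dot product.
    dot = sum(vec_a[w] * vec_b[w] for w in vec_a.keys() & vec_b.keys())
    norm_a = math.sqrt(sum(v * v for v in vec_a.values()))
    norm_b = math.sqrt(sum(v * v for v in vec_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text is similar to nothing
    return dot / (norm_a * norm_b)


def build_topic_graph(comments, threshold=0.3):
    """Adjacency matrix: 1 where two comments are similar enough.

    The threshold is an assumed cut-off, not a value from the post.
    """
    n = len(comments)
    graph = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if cosine_similarity(comments[i], comments[j]) >= threshold:
                graph[i][j] = graph[j][i] = 1
    return graph
```

Row i of the returned matrix lists which comments are topically related to comment i + 1, which is the form used in the score calculation later.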

ii) Mention Graph

A comment may get a reply from some other user, and we can consider such comments to be more closely related to each other. In the Mention Graph, we consider only those comments that got one or more replies from other users. There is an edge between X and a in the Mention Graph iff comment X got a reply from comment a.
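A small sketch of how such a graph could be built in Python. It assumes the reply relations are already available as (replier ID, target ID) pairs and that the row stands for the comment that received the reply; both the input format and the function name are assumptions made for illustration.

```python
def build_mention_graph(num_comments, replies):
    """Adjacency matrix of the Mention Graph.

    replies: iterable of (replier_id, target_id) pairs with IDs from 1 to N
    (an assumed input format). graph[x - 1][a - 1] is 1 when comment x got
    a reply from comment a.
    """
    graph = [[0] * num_comments for _ in range(num_comments)]
    for replier_id, target_id in replies:
        graph[target_id - 1][replier_id - 1] = 1
    return graph


# Example: comment 1 replied to comment 2, so row 2 becomes 1 0 0 0.
mention_graph = build_mention_graph(4, [(1, 2)])
```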

We can assign a weight to each graph. It is better to assign more weight to the Mention Graph than to the Topic Graph, so that the more dominant comments get higher scores. Vary these weights and check the generated summary each time; once the results peak, keep the weights constant.

For example, consider the second row of both figures (a) and (b), i.e., the row for comment ID 2.

Topic: 0 0 1 1, row sum = 0 + 0 + 1 + 1 = 2, weighted = 2 * 0.4 = 0.8

Mention: 1 0 0 0, row sum = 1 + 0 + 0 + 0 = 1, weighted = 1 * 0.6 = 0.6

Therefore, Intermediate Score of comment 2 = 0.8 + 0.6 = 1.4

In this way, we calculate the Intermediate Scores of all the comments.
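The same calculation as a short Python sketch. The weights 0.4 and 0.6 are the ones from the example above; the function names are only illustrative, and the graphs are assumed to be adjacency matrices with one row per comment.

```python
TOPIC_WEIGHT = 0.4    # weight of the Topic Graph, as in the example
MENTION_WEIGHT = 0.6  # weight of the Mention Graph, as in the example


def intermediate_score(topic_row, mention_row,
                       topic_weight=TOPIC_WEIGHT,
                       mention_weight=MENTION_WEIGHT):
    """Weighted sum of a comment's edge counts in the two graphs."""
    return topic_weight * sum(topic_row) + mention_weight * sum(mention_row)


def intermediate_scores(topic_graph, mention_graph):
    """Intermediate Score of every comment, in comment-ID order."""
    return [intermediate_score(t_row, m_row)
            for t_row, m_row in zip(topic_graph, mention_graph)]


# Row of comment 2 from the example; the result is 1.4 up to
# floating-point rounding.
score_2 = intermediate_score([0, 0, 1, 1], [1, 0, 0, 0])
```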

iii) Named Entity Recognition (NER)

I have read three research papers about summarization using comments, and this feature is a new one that I added to produce better results. Consider our previous scenario about Java. Since the blog is about Java, Java may well get compared with Python in the comments even though there is nothing about Python in the blog text. In that case, we may get dominant comments that are not closely related to the contents of the blog. To avoid such issues, we consider named entities. First, we find all the nouns in the content of the blog; this set is called BlogNounSet. After that, we compute an NERScore for each comment on the basis of the number of nouns it contains from BlogNounSet. Then we merge the two scores, i.e., Intermediate Score and NERScore, with proper weights to get the FinalScore of each comment. For tagging the nouns, we can make use of the Stanford POS Tagger.

After obtaining the FinalScore for each comment, we select a certain number of top-scoring comments as the dominant comments. This is the end of Phase I.
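A minimal Python sketch of the last steps of Phase I. It assumes the nouns have already been extracted by a tagger, so the noun lists are plain inputs here. Counting distinct nouns, the equal 0.5 weights, and all function names are illustrative assumptions; in practice the two scores may also need to be scaled before they are merged.

```python
def ner_score(comment_nouns, blog_noun_set):
    """Number of distinct nouns in the comment that also occur in the blog."""
    return len(set(comment_nouns) & set(blog_noun_set))


def final_scores(intermediate, ner, intermediate_weight=0.5, ner_weight=0.5):
    """Merge both scores per comment ID; the weights are assumed values."""
    return {cid: intermediate_weight * intermediate[cid] + ner_weight * ner[cid]
            for cid in intermediate}


def select_dominant(scores, k):
    """IDs of the k comments with the highest FinalScore."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical data: comment 2 talks mostly about Python.
blog_noun_set = {"java", "jvm", "compiler"}
ner = {1: ner_score(["java", "jvm", "speed"], blog_noun_set),
       2: ner_score(["python", "speed"], blog_noun_set)}
scores = final_scores({1: 1.0, 2: 1.4}, ner)
dominant = select_dominant(scores, k=1)
```

In this made-up example, comment 2 has the higher Intermediate Score, but it shares no nouns with the blog, so comment 1 ends up as the dominant one.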

Phase II:

Now we have the set of all Dominant Comments (DC). In this phase, we extract the sentences that are most similar to the comments in DC, again using cosine similarity. This phase is as follows:

For each sentence s in the set of sentences S of the blog text

total[s] = 0

end of for loop

For each sentence s in S

For each comment c in DC

total[s] += Cosine_similarity( s, c )

end of inner for loop

end of outer for loop

sort( S by total ) // Sort the sentences by total score in non-increasing order (highest first)

Print first n sentences where n = 20% of |S|
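The steps above can be sketched in Python as follows. This is only a sketch under simple assumptions: sentences and comments are plain strings, similarity is computed on raw word counts, and the names term_vector and summarize are made up for illustration.

```python
import math
import re
from collections import Counter


def term_vector(text):
    """Word-count vector of a text (lowercased alphanumeric tokens)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(vec_a, vec_b):
    """Cosine of the angle between two word-count vectors."""
    dot = sum(vec_a[w] * vec_b[w] for w in vec_a.keys() & vec_b.keys())
    norm = (math.sqrt(sum(v * v for v in vec_a.values()))
            * math.sqrt(sum(v * v for v in vec_b.values())))
    return dot / norm if norm else 0.0


def summarize(sentences, dominant_comments, ratio=0.2):
    """Return the top `ratio` share of sentences, highest total score first."""
    comment_vectors = [term_vector(c) for c in dominant_comments]
    totals = []
    for sentence in sentences:
        sentence_vector = term_vector(sentence)
        totals.append(sum(cosine_similarity(sentence_vector, cv)
                          for cv in comment_vectors))
    n = max(1, round(len(sentences) * ratio))  # at least one sentence
    ranked = sorted(range(len(sentences)),
                    key=lambda i: totals[i], reverse=True)
    return [sentences[i] for i in ranked[:n]]
```

The sentences come back ordered by score. If the summary should read like the original text, the selected sentences could be put back into document order before printing; that is a design choice, not something the algorithm requires.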

Phase III:

We got the summary in Phase II, but we need some way to evaluate whether our system-generated summary is good or not. For that purpose, we use Kavita Ganesan's ROUGE (ROUGE stands for Recall-Oriented Understudy for Gisting Evaluation). It's a Perl script that evaluates our system-generated summary against several manually written summaries taken as the benchmark. It makes use of n-grams for the evaluation. In this way, we can check the performance of our algorithm.
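To give a feel for what an n-gram based evaluation measures, here is a simplified Python sketch of ROUGE-N recall: the share of reference n-grams that also appear in the system summary. It is not the Perl script mentioned above and skips its options (stemming, stop-word removal, and so on); the function names and the whitespace tokenization are assumptions for illustration.

```python
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(candidate, references, n=1):
    """Matched reference n-grams divided by all reference n-grams."""
    candidate_counts = ngrams(candidate.lower().split(), n)
    matched = total = 0
    for reference in references:
        reference_counts = ngrams(reference.lower().split(), n)
        total += sum(reference_counts.values())
        # Clip each match at the number of times the candidate has the n-gram.
        matched += sum(min(count, candidate_counts[gram])
                       for gram, count in reference_counts.items())
    return matched / total if total else 0.0


system_summary = "java runs on the jvm"
manual_summaries = ["java runs on every jvm"]
unigram_recall = rouge_n_recall(system_summary, manual_summaries, n=1)
bigram_recall = rouge_n_recall(system_summary, manual_summaries, n=2)
```

Here four of the five reference unigrams and two of the four reference bigrams are found in the system summary, so the two recalls are 0.8 and 0.5.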

I hope you all EnJoY this post and please don’t forget to write comments or mails of appreciation. Your remarks are welcome.

Thanks a lot !!!!!!

References:

Comments-Oriented Document Summarization: Understanding Documents with Readers’ Feedback, by Meishan Hu, Aixin Sun, and Ee-Peng Lim

Comments-Oriented Blog Summarization by Sentence Extraction, by Meishan Hu, Aixin Sun, and Ee-Peng Lim

Summarizing User-Contributed Comments, by Elham Khabiri, James Caverlee, and Chiao-Fang Hsu